Protecting access to trustworthy information and content online

When our users place their trust in us, we have an obligation to deliver trustworthy, helpful information that meets their needs. As a result, we invest in the teams and systems needed to keep our platforms safe and constantly evolve the ways we combat harmful and illegal content.

OVERVIEW

Protecting people from harmful and illegal content and making our products safer for everyone is core to the work of many different teams across Google and YouTube. When it comes to the information and content on our platforms, we take seriously our responsibility to safeguard the people and businesses using our products, and to do so with clear, transparent policies and processes.

We also support reasonable and consistent regulation and liability protections that allow us to effectively combat harmful content while protecting the core benefits of online environments, including the ability to find a wide range of viewpoints, access useful information and connect with one another. We are committed to partnering with governments and civil society to help ensure people around the world are able to access trustworthy information and content online.

WORKING TOGETHER

Sharing expertise and resources to help achieve collective goals

FAQ

We are constantly developing and improving the tools, processes, and teams that help us provide access to trustworthy information from quality sources and moderate content across our services. Google and YouTube make significant investments in technology and people to combat harmful content in an effective and consistent way. It’s a complex task, and we are constantly refining our practices while partnering with experts and organizations to learn and inform our approach:

- We detect and quickly remove harmful content to protect users online.

- We deliver reliable information and tools to evaluate the content found online.

- We reward responsible creators by allowing them to monetize channels and content that are original, engaging and respectful.

- We partner with experts and share technology to create a safer internet.

A smart regulatory framework is essential to enabling an appropriate approach to harmful content. Our practices are informed by four key principles, which could also form a basis for an effective regulatory framework:

- Shared Responsibility: Tackling illegal content is a societal challenge, one in which companies, governments, civil society, and users all have a role to play. In some cases, content may not be clearly illegal, either because the facts are uncertain or because the legal outcome depends on a difficult balancing act. In turn, courts have an essential role to play in fact-finding and reaching legal conclusions on which platforms can rely.

- Rule of law and creating legal clarity: It’s important to clearly define what platforms can do to fulfill their legal responsibilities, including removal obligations. An online platform that takes other voluntary steps to address illegal content should not be penalized.

- Flexibility to accommodate new technology: Laws should accommodate relevant differences between platforms. Given the fast-evolving nature of the sector, they should also be written in ways that address the underlying issue rather than focusing on existing technologies or mandating specific technological fixes.

- Fairness and transparency: Laws should support companies’ efforts to be transparent about their content removals through transparency reports, appropriate notices, and appeals processes that balance the different policy goals at stake.

At Google and YouTube, everything we do for kids, teens, and families is created to empower, designed to respect, and built to protect. We build age-appropriate products that give families flexibility to manage their unique relationships with technology and implement policies, protections and programs that help keep every kid and teen safer online. We also have our Youth Principles, which drive YouTube’s ongoing approach to product and policy development for our younger users.

Through Family Link, we allow parents to set up supervised accounts for their children, set screen time limits, and more. Our Be Internet Awesome digital literacy program helps kids learn how to be safe and engaged digital citizens. The dedicated YouTube Kids app is a separate app for our youngest users and offers a safer, simpler place where kids can learn and explore their interests. As children get older, parents have the choice to allow their family to explore more of YouTube with a supervised experience. Kids Space and teacher-approved apps in Play also offer experiences that are customized for younger audiences.

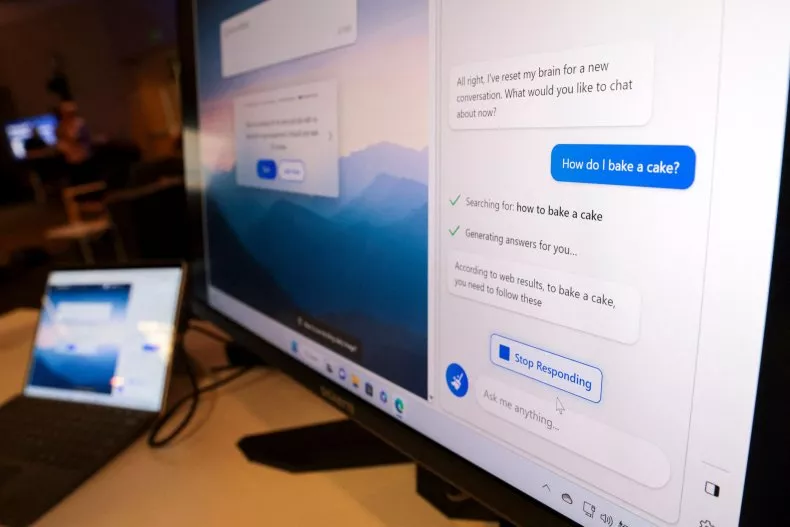

We also think that AI can be a useful learning tool that enables kids to dig deeper into topics and practice new skills. We want them to be able to explore this new technology, and we want to give them the tools and resources to do so responsibly.

We also partner with experts in children’s media, child development, digital learning, and citizenship to weigh in on products, policies and services we offer to young people and families. Together, we work to foster a safe, high quality, helpful platform that enriches the lives of families around the world.

Google and YouTube are committed to fighting child sexual abuse material (CSAM) online and have built a dedicated system to fight child sexual abuse and exploitation on our platforms. We invest heavily in fighting child exploitation online and use our proprietary technology to deter, detect, and remove offenses on our platforms. We also use our technical expertise to develop and share tools to help other organizations, like Facebook, Adobe, and Reddit, detect and remove CSAM from their platforms.

Explore data regarding Google’s global efforts and resources to combat CSAM on our platform and learn more about our work to protect children.

Since 2010, Google has regularly published the Transparency Report to shed light on how the policies and actions of governments and corporations affect privacy, security, and access to information. Transparency Reports from Google and YouTube include data on content removal requests from governments, content delistings due to copyright, YouTube Community Guidelines enforcement, and political advertising on Google, among others.

Explore the Transparency Report.