Advancing bold and responsible approaches to AI

We aim to unlock the benefits of AI that can help solve society’s biggest challenges, while safeguarding user privacy, security, and safety.

OVERVIEW

Building helpful and safer AI

Building AI that will maximize the benefits for people and society is a collective effort. As part of our AI Principles, which guide us every step of the way in developing AI responsibly, we collaborate with researchers across industry and academia while engaging with governments and civil society to establish boundaries, like policies and regulation, that can help promote progress while reducing risks of abuse.

Learn more about our latest work at AI.google

WORKING TOGETHER

We share expertise and resources with other organizations to help achieve collective goals

External Perspective

We are constantly seeking out external viewpoints on public policy challenges

FAQ

We believe AI responsibility is about using AI to help improve people's lives and address social and scientific challenges as well as about avoiding risks. Our AI Principles offer a framework to guide our decisions on research, product design, and development as well as ways to think about solving the numerous design, engineering, and operational challenges associated with any emerging technology.

But, as we know, issuing principles is one thing; applying them is another. We regularly publish updates on our progress in applying our AI Principles, share best practices, and continue to engage with researchers, scientists, industry, governments, and people using AI in their daily lives.

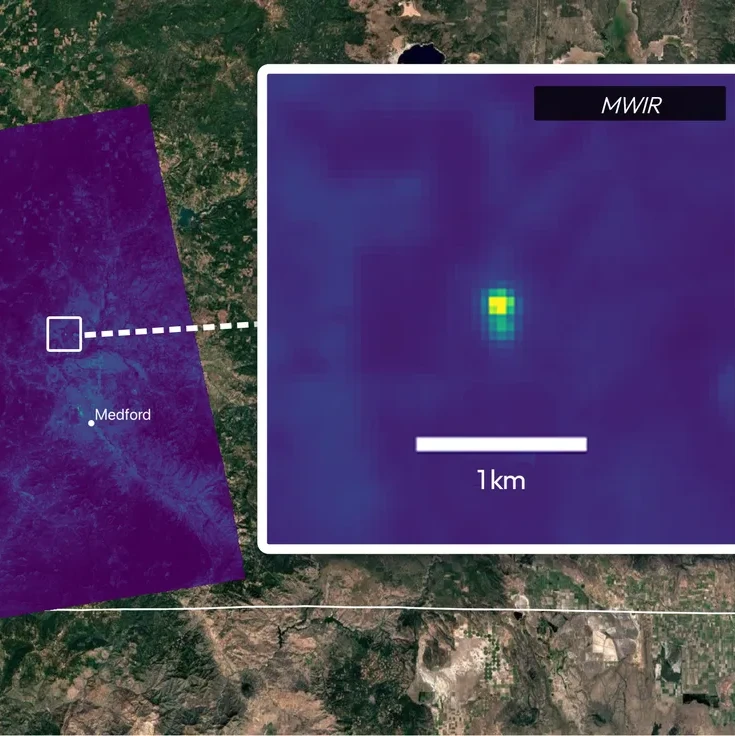

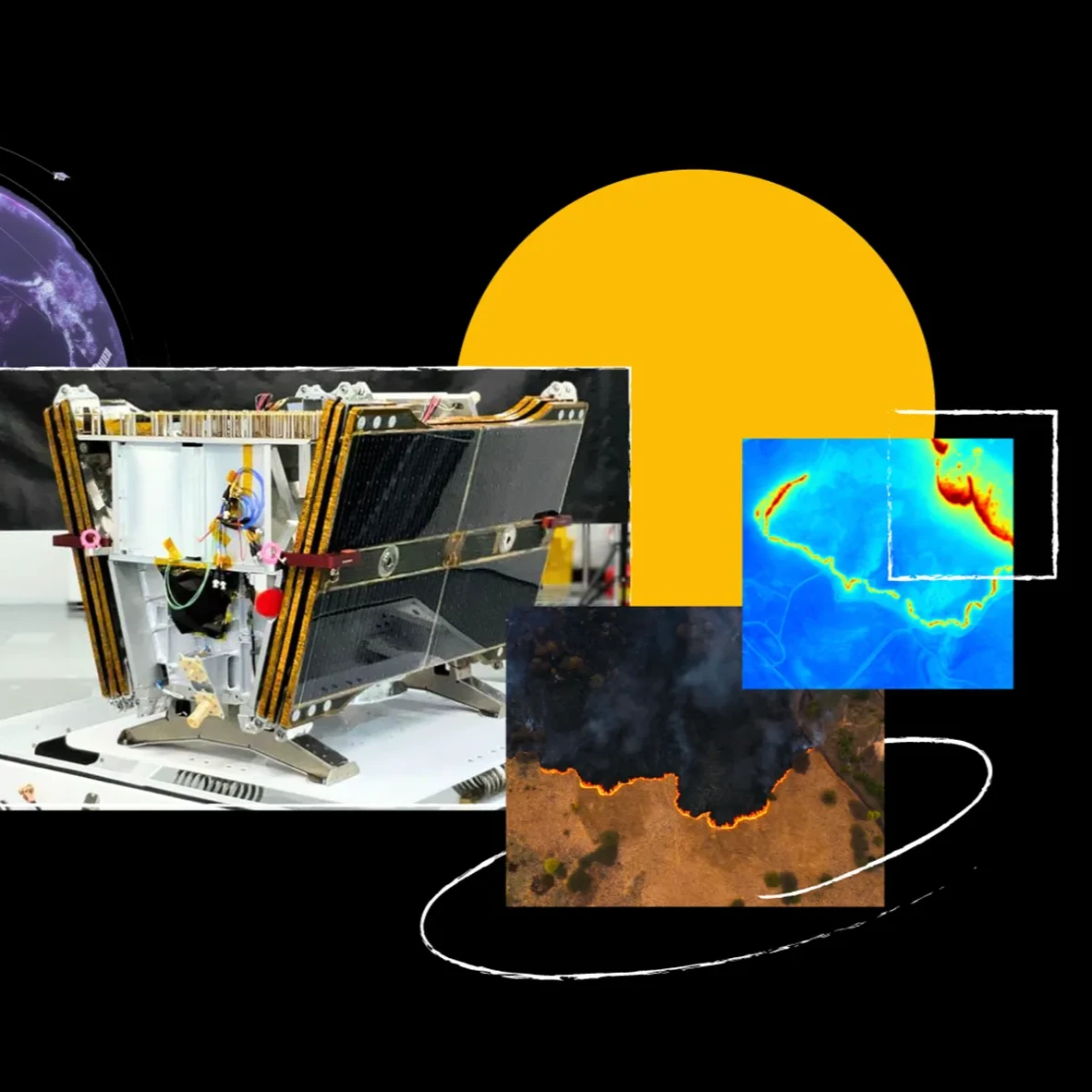

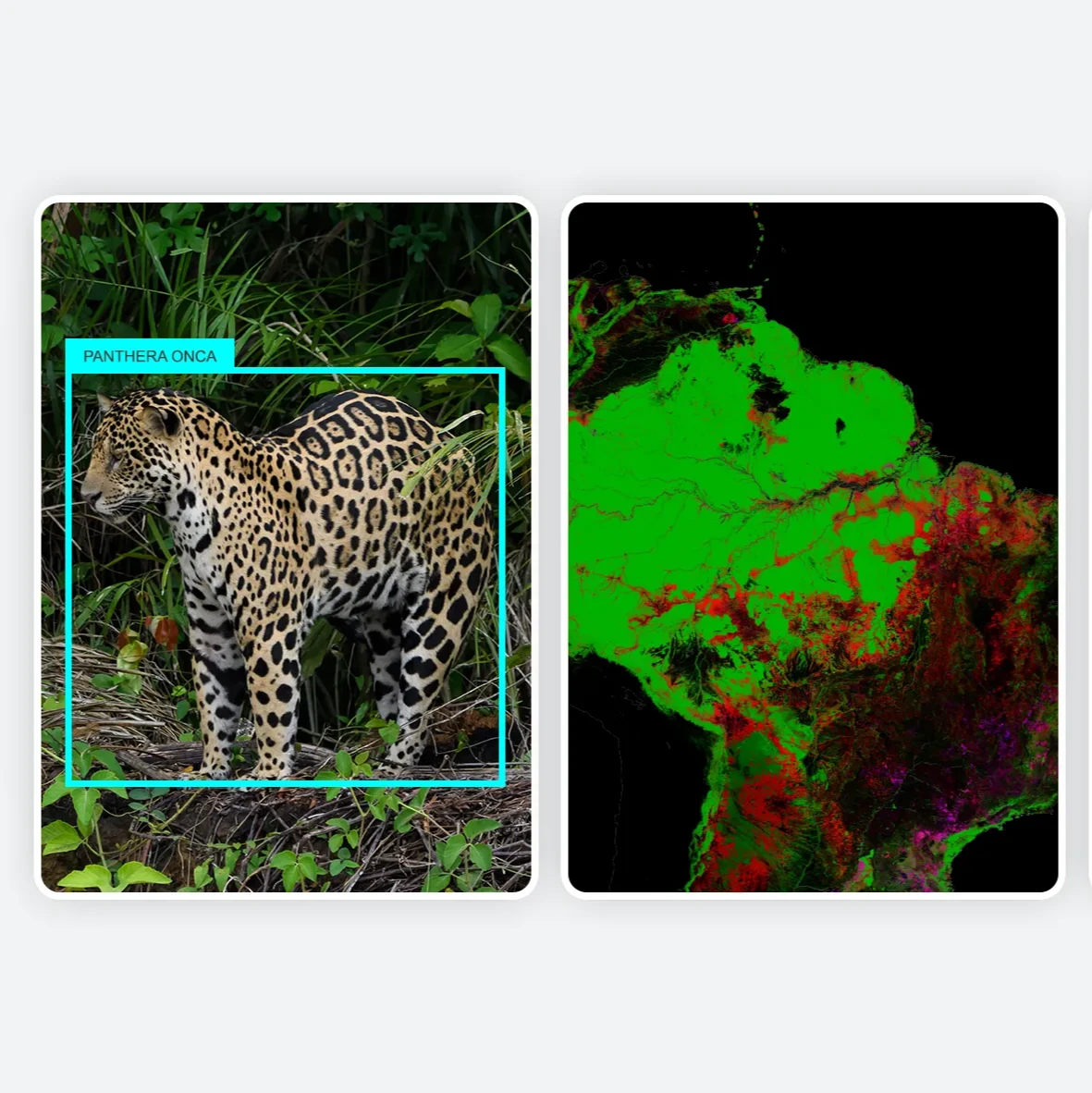

AI opens up new opportunities that could significantly improve billions of lives. We’re dedicated to maximizing AI’s potential across key areas where it can drive tremendous impact – including science, education, the workforce, accessibility, and the public sector.

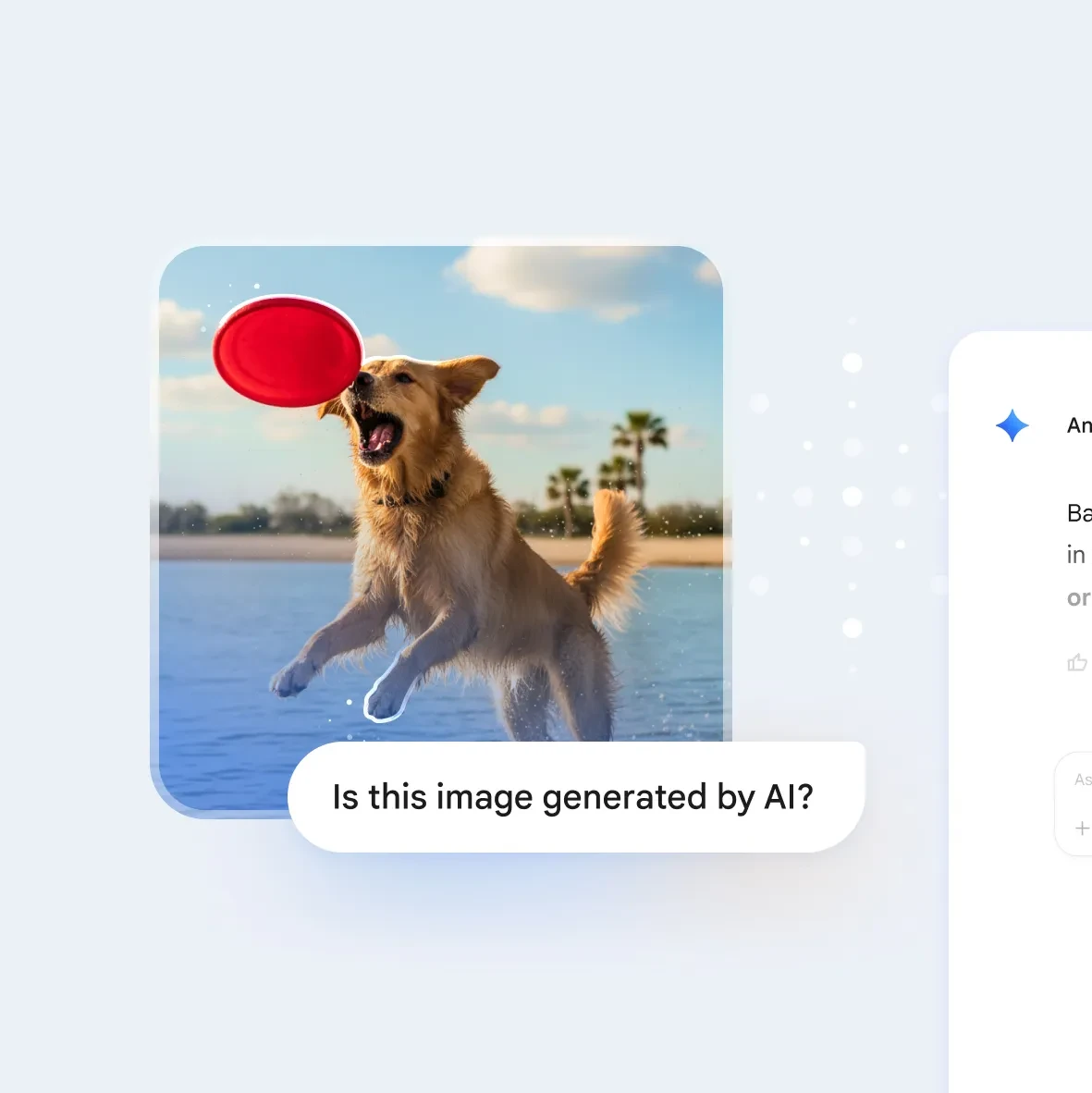

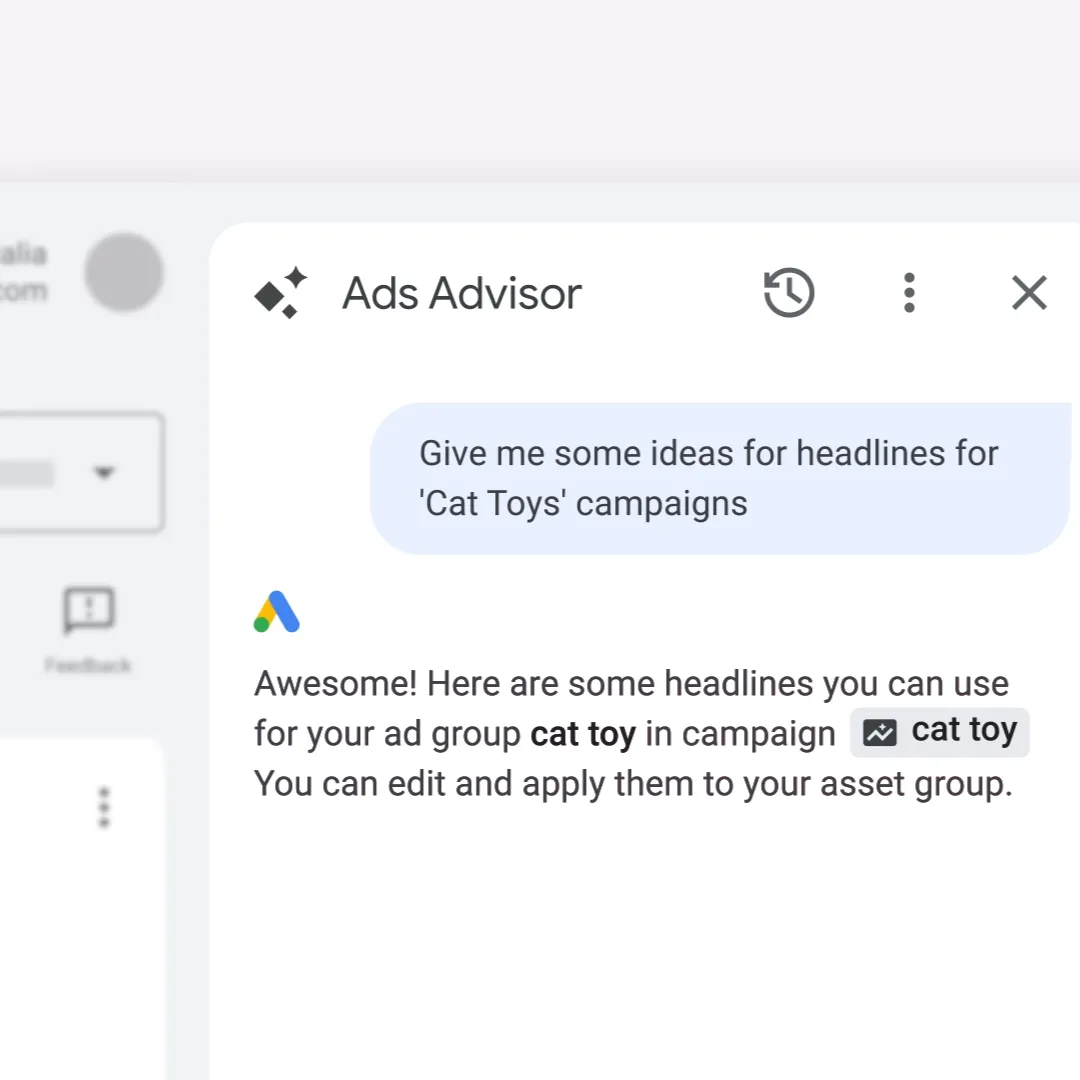

We apply our AI advances to our core products and services to enhance and multiply their usefulness and value. Billions of people use Google AI when they engage with Google Search, Google Photos, Google Maps, and Google Workspace; hardware devices like Pixel and Nest; and accessibility applications like Android Voice Access, Live Transcribe, and Project Relate. We’re excited about the potential for AI to continue to make our products even more useful and transformative.

No single company can advance this approach alone. AI responsibility is a collaborative exercise that requires bringing multiple perspectives to the table to help ensure balance.

That’s why we’re committed to working in partnership with others to get AI right. Over the years, we’ve built communities of researchers and academics dedicated to creating standards and guidance for responsible AI development. We collaborate with university researchers at institutions around the world, and to support international standards and shared best practices, we’ve contributed to the ISO and IEC Joint Technical Committee’s standardization program in the area of artificial intelligence.

AI will be critical to our scientific, geopolitical, and economic future, enabling current and future generations to live in a more prosperous, healthy, secure, and sustainable world. Governments, the private sector, educational institutions, and other stakeholders must work together to capitalize on AI’s benefits, while simultaneously managing risks.

Tackling these challenges will again require a multi-stakeholder approach to governance. Some of these challenges will be more appropriately addressed by standards and shared best practices, while others will require regulation—for example, requiring high-risk AI systems to undergo expert risk assessments tailored to specific applications. Other challenges will require fundamental research, in partnership with communities and civil society, to better understand potential harms and mitigations. International alignment will also be essential to develop common policy approaches that reflect democratic values and avoid fragmentation.

Our Policy Agenda for Responsible Progress in Artificial Intelligence outlines specific policy recommendations for governments around the world to realize the opportunity presented by AI, promote responsibility and reduce the risk of misuse, and enhance global security.